Every new technology changes how people work — but rarely what they work toward. When the first tractors appeared in the early 1900s, they transformed agriculture overnight. Fields grew larger. Harvests came faster. Productivity soared. But farming still relied on human judgment — what to plant, when to pivot, how to adapt when conditions shifted. Tractors multiplied output, but people learned to guide them, improving the quality of every harvest.

According to a mabl report, more than 55% of organizations now use AI in testing. Yet, a Katalon survey found 82% of QA professionals still perform manual testing daily.

Even when automation handles scale, humans define what quality feels like. That balance — AI for precision, humans for decisions — is what builds trust in a product.

The Human Context of Quality

Manual testing is about empathy, not repetition. It's the ability to sense when a feature technically passes but emotionally fails. Automated scripts can confirm that a button exists and operates. A human tester notices the button:

- Overlaps text in Spanish localization

- Disappears in dark mode

- Appears before form completion

Human intuition matters more as software reaches more people, devices, and cultures. A tester's instinct asks why someone would tap here instead of there. This instinct is the final barrier between working code and a satisfying product.

Where AI Excels

One thing is clear: AI has value in quality assurance testing. It's reshaping how QA teams operate. AI testing tools automatically create test cases, update scripts after UI changes, and predict where failures might occur. It's paying off — mabl found that 70% of mature DevOps teams report faster release velocity thanks to AI.

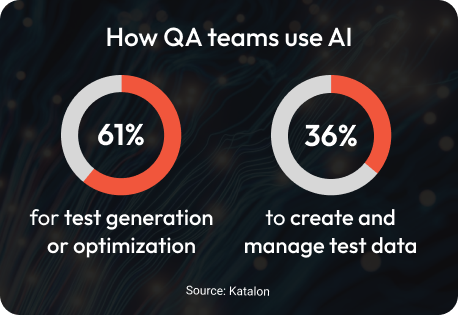

It doesn't make sense to ignore such results, which is why the industry is embracing AI. Modern automated testing tools powered by machine learning are now central to nearly every QA automation strategy. Katalon's survey shows that 61% of QA teams use AI for test generation or optimization. Another 36% apply it to create or manage test data. That's real progress, and it's not about replacing people but about removing what holds them back.

AI excels at scale. It doesn't tire, forget, or lose focus after the hundredth regression cycle. It can monitor performance trends, run parallel tests, and surface anomalies faster than any human-led testing process could.

The result is tighter feedback loops and more confident deployments. But speed without discernment isn't the goal. The best QA outcomes happen when automation clears the noise so testers can hear the signal. That's where collaboration begins.

Preparation, Execution, and Insight

AI handles the mechanical side of testing exceptionally well. It generates, prioritizes, and maintains test cases, and it even spots high-risk patterns. What it can't do is decide what's worth testing or what outcomes truly matter to users. That's where human testers bring context.

A skilled tester understands business logic, regulatory details, and design intent. Algorithms can't derive those insights from data alone. AI might flag a layout change as a failure; a human recognizes it as part of a redesign.

The same collaboration plays out during execution. AI engines manage the heavy lifting of regression and performance testing. Testers focus on the unpredictable aspects and think like users.

Testers intentionally overlook documentation, like users. They click the unclickable buttons, like users. They push the product beyond its safe limits. Just like users.

That's how you make a product shine.

When to Use AI vs. Manual Testing

The strongest QA teams find balance. Here's how AI and manual testing contribute to releasing a product that works and feels right:

AI generally takes the lead in:

- Regression testing — rerunning established tests across versions to catch defects that have been reintroduced.

- Performance and load testing — optimizing response time, scalability, and stability using AI-enhanced analysis and prediction.

- Cross-browser and cross-platform validation — ensuring consistency across environments.

- Test data generation — creating diverse data sets for different scenarios automatically.

- Risk-based prioritization — analyzing defect patterns to decide which tests to run first.

- Self-healing automation — updating scripts automatically when UI or element changes occur.
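
To make one of these items concrete, here is a minimal sketch of what risk-based prioritization could look like. It is an illustration only, not how any particular AI testing tool works: the `CaseRecord` fields, the `change_weight` value, and the sample test names are all assumptions made up for this example. The idea is simply to run first the tests that fail most often and that touch recently changed code.

```python
from dataclasses import dataclass


@dataclass
class CaseRecord:
    """Hypothetical summary of one test's recent history."""
    name: str
    runs: int                  # recent executions of this test
    failures: int              # how many of those executions failed
    covers_changed_code: bool  # does it exercise code changed in this release?


def risk_score(case: CaseRecord, change_weight: float = 0.5) -> float:
    """Historical failure rate plus a fixed bonus for touching changed code."""
    failure_rate = case.failures / case.runs if case.runs else 0.0
    return failure_rate + (change_weight if case.covers_changed_code else 0.0)


def prioritize(cases: list[CaseRecord]) -> list[str]:
    """Return test names ordered from highest to lowest risk score."""
    return [c.name for c in sorted(cases, key=risk_score, reverse=True)]


# Made-up sample data for illustration.
history = [
    CaseRecord("login", runs=50, failures=1, covers_changed_code=False),
    CaseRecord("checkout_flow", runs=50, failures=10, covers_changed_code=True),
    CaseRecord("search_filters", runs=40, failures=8, covers_changed_code=False),
]

print(prioritize(history))  # ['checkout_flow', 'search_filters', 'login']
```

A real tool would likely weigh many more signals, but even this toy version shows the division of labor: the score decides the order, while a person still decides which signals and weights reflect what matters to users.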

Human testers are indispensable for:

- Exploratory testing — discovering issues through curiosity, intuition, and creative misuse.

- Accessibility testing — interpreting how design, language, and interaction affect real users.

- Cross-device experience testing — understanding tactile, visual, and contextual differences that no machine can feel.

- Compliance and regulatory validation — using AI to accelerate rule-based checks while relying on human expertise to interpret and verify compliance in real-world contexts.

- User experience evaluation — detecting friction or confusion that metrics alone can't describe.

- AI oversight and validation — reviewing automated results and model behavior for accuracy, bias, and user-impact alignment.

The Future Is Collaborative

The future of testing is not automated. The future of testing is augmented. AI accelerates processes and reduces maintenance. As a result, human testers are shifting from task execution to strategy planning. Their role now centers on asking better questions:

“Are we testing what matters most?”

“Does this outcome reflect our users' reality?”

“Will this make the experience better for real people?”

As Logiciel noted in its 2025 analysis, manual testing is increasingly focused on exploratory, compliance, and empathy-driven work — the areas AI still struggles to replicate.

Putting Human Insight to Work

A century ago, machines changed how we worked. Today, AI is doing the same. What hasn't changed is the need for human judgment to guide the process — to make sure what's possible still feels right.

At PLUS QA, that's what our testers do every day. They use real devices and real-world insight to shape technology around people, just as innovators always have. Discover how to integrate AI into your testing strategy while preserving the human touch in QA with our comprehensive guide, The Impact of AI on Software Testing.

Contact our team to learn how PLUS QA's manual testing services can build upon your automation efforts — so progress still feels human.